12 Oct 2024

When working with large datasets in Elasticsearch, efficient pagination becomes crucial for maintaining performance. The search_after parameter offers a solution by allowing you to paginate through results based on the sorting values of the last document from the previous page. In this post, we’ll explore how to implement search_after pagination using the Elasticsearch-Rails gem.

Why Use search_after?

Traditional pagination using the from and size parameters becomes inefficient for deep pages: each shard has to collect and sort from + size documents only to discard most of them, and by default Elasticsearch rejects requests where from + size exceeds 10,000 (the index.max_result_window setting). search_after scales better because it uses the sort values of the last seen document as the starting marker for the next page.
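To make the idea concrete, here is a toy in-memory sketch of what "continue after the last sort values" means. It does not talk to Elasticsearch at all; the Doc struct, the sample data, and the page_after helper are made up for illustration.

```ruby
# Toy in-memory model of search_after; no Elasticsearch involved.
Doc = Struct.new(:id, :created_at)

DOCS = [
  Doc.new(1, 100), Doc.new(2, 100), Doc.new(3, 105),
  Doc.new(4, 110), Doc.new(5, 110)
].freeze

# Returns up to `size` docs whose [created_at, id] sort key comes strictly
# after `search_after` (nil means "start from the beginning").
def page_after(docs, search_after: nil, size: 2)
  sorted = docs.sort_by { |d| [d.created_at, d.id] }
  sorted = sorted.select { |d| ([d.created_at, d.id] <=> search_after) == 1 } if search_after
  sorted.first(size)
end

first_page  = page_after(DOCS)
cursor      = [first_page.last.created_at, first_page.last.id]
second_page = page_after(DOCS, search_after: cursor)

puts first_page.map(&:id).inspect   # ids on page 1
puts second_page.map(&:id).inspect  # ids on page 2
```

Documents 1 and 2 share the same created_at, so without the id in the sort key the cursor could not tell them apart. That is the same job the tie-breaker does in the real query.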

Implementation Steps

1. Set Up Your Model

First, ensure your model is set up with Elasticsearch:

class Product < ApplicationRecord
  include Elasticsearch::Model
  include Elasticsearch::Model::Callbacks

  # Define your index settings and mappings here
end

2. Define a Custom Search Method

Create a class method in your model to handle the search_after logic:

class Product < ApplicationRecord
  # ...

  def self.search_with_after(query, sort_field, search_after = nil, size = 20)
    search_definition = {
      query: query,
      sort: [
        { sort_field => { order: 'asc' } },
        { id: { order: 'asc' } } # Tie-breaker
      ],
      size: size
    }

    search_definition[:search_after] = search_after if search_after

    __elasticsearch__.search(search_definition)
  end
end

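3. Perform the Initial Search

In your controller or service object, perform the initial search:

query = { match: { name: 'example' } }
results = Product.search_with_after(query, :created_at)
@products = results.records
# Load the records first so that `last` follows the Elasticsearch hit order
# rather than issuing a separate primary-key-ordered query
@last_sort_values = results.records.to_a.last&.slice(:created_at, :id)&.values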

4. Implement Pagination in Your View

In your view, add a “Next Page” link that includes the last sort values:

<% @products.each do |product| %>
  <!-- Display product information -->
<% end %>

<% if @last_sort_values %>
  <%# Pass q along so the next page runs the same query %>
  <%= link_to "Next Page", products_path(q: params[:q], search_after: @last_sort_values.to_json) %>
<% end %>

5. Handle Subsequent Searches

In your controller, handle the search_after parameter for subsequent pages:

class ProductsController < ApplicationController
  def index
    query = { match: { name: params[:q] } }
    search_after = JSON.parse(params[:search_after]) if params[:search_after]
    results = Product.search_with_after(query, :created_at, search_after)
    @products = results.records
    # Load the records first so that `last` follows the Elasticsearch hit order
    @last_sort_values = results.records.to_a.last&.slice(:created_at, :id)&.values
  end
end
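Because search_after arrives straight from the URL, it is worth validating before it goes into the query. Here is a minimal sketch of one way to do that; parse_search_after is a hypothetical helper (not part of elasticsearch-rails), and it assumes the cursor is always a two-element JSON array like the one built in the view.

```ruby
require "json"

# Hypothetical helper: parse a search_after param into [sort_value, id],
# returning nil for anything missing or malformed.
def parse_search_after(raw)
  return nil if raw.nil? || raw.empty?

  values = JSON.parse(raw)
  return nil unless values.is_a?(Array) && values.size == 2
  return nil unless values.all? { |v| v.is_a?(String) || v.is_a?(Numeric) }

  values
rescue JSON::ParserError
  nil
end

p parse_search_after('["2024-10-12T10:00:00.000Z", 42]')
p parse_search_after("not json")
```

Treating a bad cursor as nil simply sends the user back to the first page instead of raising a 500.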

Considerations and Best Practices

- Consistent Sorting: Ensure your sort fields are consistent across searches to maintain proper pagination.

- Tie-breakers: Always include a tie-breaker field (like id) in your sort to handle documents with identical sort values.

- Statelessness: search_after is stateless, making it suitable for scenarios where users might open multiple browser tabs or share links.

- Performance: While more efficient than deep offset pagination, be mindful of very large result sets and consider implementing reasonable limits.

11 Oct 2024

If you’re a longtime Vim user like me, you’ve probably used the CtrlP plugin at some point in your workflow. One of my favorite features in CtrlP was being able to hit <C-p> twice and instantly resume the last fuzzy search.

This simple trick saved me from having to repeat the same query again and again.

Recently, while transitioning to Neovim, I started using Telescope, which offers a more powerful and extensible fuzzy finder.

But one feature I was really missing from CtrlP was the ability to resume the last search without retyping it.

Resuming Your Last Search with Telescope

While this feature is mentioned in the GitHub docs under the Vim Pickers section, there is no default keybinding for it, making it easy to overlook.

Telescope’s resume feature allows you to pull up your last search exactly where you left off. This is especially useful when you’ve closed the fuzzy finder, but want to bring it back with all the previous search context.

Here’s how you can map the resume command to a shortcut key:

vim.keymap.set('n', '<leader>tr', '<cmd>Telescope resume<CR>', { noremap = true, silent = true })

Now, with <leader>tr, I can resume my last Telescope search instantly, just like I used to do with CtrlP by hitting <C-p> twice.

Use Cases for Telescope resume

There are several moments when resume really shines:

- Switching between buffers: Maybe you started a fuzzy search for a file, got distracted by something else, and need to get back to it quickly.

- Live grep continuation: If you were performing a project-wide search using live_grep and closed the picker, you can immediately resume and continue refining your search.

- Navigating between recently opened files: If you were exploring multiple files and closed the search prematurely, resume will restore that exact file list.

Sending Telescope Results to Quickfix List

Another powerful feature in Telescope that enhances your workflow is the ability to send your current search results to a quickfix list. This is particularly useful when you want to work with multiple results from your search.

By default, you can use <C-q> while in a Telescope picker to send all the current results to the quickfix list. This works for any Telescope picker, including file search, grep results, and more.

Here are some benefits of using this feature:

- Persistent Results: The quickfix list persists even after you close the Telescope window, allowing you to refer back to your search results later.

- Batch Operations: You can perform operations on multiple files in the quickfix list, such as search and replace across all results.

- Navigation: Quickly jump between search results using quickfix navigation commands.

To use this feature:

- Open any Telescope picker (e.g., <leader>ff for file search or <leader>fg for live grep)

- Perform your search as usual

- Press <C-q> to send the current results to the quickfix list

- Close the Telescope window (optional)

- Use :copen to open the quickfix window and navigate through your results

10 Oct 2024

As a developer, you often switch between branches or commits while working on multiple tasks, testing out code, or reviewing changes. One handy Git command that can help speed up this process is git checkout -. This command acts like a toggle, letting you jump back and forth between your current branch or commit and the last one you were on. Let’s explore a good use case for this feature and why it can be so useful in your daily workflow.

What is git checkout -?

In Git, git checkout - is shorthand for git checkout @{-1}: it checks out the previously checked-out reference, whether that reference is a branch, a commit, or a tag. It lets you quickly toggle between your current location and the one you just left.
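If the toggle behavior feels abstract, this tiny Ruby model may help. It is only an illustration of the "remember where I came from" idea and not how Git is implemented; the ToyRepo class is invented for this sketch.

```ruby
# Toy model of how `git checkout -` resolves; not how Git is implemented.
class ToyRepo
  attr_reader :current

  def initialize(start_ref)
    @current = start_ref
    @previous = nil
  end

  # Check out `ref`; "-" means "whatever was checked out before".
  def checkout(ref)
    target = ref == "-" ? @previous : ref
    raise ArgumentError, "no previous ref to return to" if target.nil?

    @previous, @current = @current, target
    @current
  end
end

repo = ToyRepo.new("feature/new-onboarding-flow")
repo.checkout("main")
repo.checkout("-")   # back on the feature branch
repo.checkout("-")   # and on main again
puts repo.current
```

Every checkout swaps "current" and "previous", which is why running the command twice in a row brings you back to where you started.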

Example Scenario: Reviewing and Hotfixing

Let’s say you’re working on a feature branch, feature/new-onboarding-flow, and you’re in the middle of implementing some new functionality. Suddenly, you receive an urgent request to fix a bug in production.

Here’s how git checkout - can come in handy:

Step 1: Switch to the main Branch for the Hotfix

You need to fix the bug, so you quickly switch from your feature branch to the main branch:

git checkout main

Now you’re on the main branch, where you can apply the necessary hotfix.

Step 2: Make the Hotfix and Push the Changes

You make the changes, commit the fix, and push it to the remote repository:

git commit -m "Fix critical bug in production"

git push origin main

Step 3: Switch Back to Your Feature Branch

After the fix is done, you want to quickly get back to working on your feature. Instead of typing the branch name again or checking the logs for where you were, you can simply run:

git checkout -

This command takes you back to the feature/new-onboarding-flow branch where you left off, allowing you to continue right where you were without extra steps.

Step 4: Toggle Back Again

Need to check something else on the main branch again? Just run git checkout - once more to toggle back, and you’re instantly switched to main.

Handling Uncommitted Changes

While git checkout - is a powerful shortcut, you need to be mindful of uncommitted changes when using it. If you have uncommitted changes, Git will do one of two things:

- If there are no conflicts: Git carries your uncommitted changes over to the branch or commit you switch to. This can be convenient when you want to keep working on something across branches.

- If there are conflicts: Git will prevent the switch and display an error message like:

error: Your local changes to the following files would be overwritten by checkout:

<file-name>

Please commit your changes or stash them before you switch branches.

In this case, you’ll need to either commit your changes, discard them, or stash them using git stash.

Using git stash to Save Uncommitted Changes

If you want to switch branches without losing your current work, you can use git stash to temporarily save your uncommitted changes, then switch branches, and later reapply the changes.

Here’s how to do it:

git stash # save your uncommitted changes

git checkout - # switch back to the previous branch

git stash pop # reapply your changes

This workflow ensures that you can quickly move between branches or commits without losing your in-progress work.

Toggling Between Branches and Commits

Let’s say before fixing the bug, you briefly switched to an older commit to test some behavior:

git checkout <commit-hash>

After testing the old commit, running git checkout - will take you right back to the branch you were working on. This flexibility to switch between branches and specific commits makes git checkout - particularly useful during testing or debugging sessions.

Why Use git checkout -?

1. Speed:

When you’re working on multiple branches or need to switch between a branch and a specific commit, typing git checkout - is much faster than remembering the branch name or commit hash.

2. Convenience:

If you frequently switch between a feature branch and the main or develop branch, or between a commit and your branch, git checkout - saves you from having to repeat the full checkout commands.

3. Simplicity:

For quick, temporary switches (such as testing a fix, reviewing a commit, or updating something on a different branch), you can toggle back and forth without cluttering your workflow or history. You just need to remember to use git stash if you have uncommitted changes that might cause conflicts.

Additional Tips

- You can combine git checkout - with other Git commands like git rebase or git merge if you frequently move between branches during development. The - shorthand also works as an argument to some of them: git merge - merges the branch you were on previously.

- This command is context-aware, meaning it works whether you last checked out a branch, a commit, or even a tag. It simply returns you to where you came from.

04 Oct 2024

Monitoring is a crucial aspect of maintaining the reliability and performance of any application. In this post, we’ll explore how to integrate Prometheus, a free and open-source monitoring system, with a Ruby on Rails application for observability. We’ll cover tracking HTTP requests and exceptions, visualizing metrics, and setting up alerts, all using open-source tools.

What is Prometheus?

Prometheus is a powerful monitoring and alerting toolkit designed for recording real-time metrics in a time-series database. It allows you to scrape metrics from your application and provides a flexible query language, PromQL, for analyzing them.

Setting Up Prometheus with Rails

To integrate Prometheus into your Rails application, you will need to follow these steps:

1. Install the prometheus-client Gem

Add the prometheus-client gem to your Gemfile:

gem 'prometheus-client'

Then run:

bundle install

2. Define Your Metrics

Create a new initializer (e.g., config/initializers/prometheus_metrics.rb) and set up your metrics:

require "prometheus/client"

prometheus = Prometheus::Client.registry

# Counter for HTTP requests
http_requests = prometheus.counter(
  :http_requests_total,
  docstring: "A counter of HTTP requests made.",
  labels: [:method, :path]
)

# Counter for exceptions
http_exceptions = prometheus.counter(
  :http_exceptions_total,
  docstring: "Total number of exceptions raised."
)

# Expose metrics for Rails requests
ActiveSupport::Notifications.subscribe("process_action.action_controller") do |name, start, finish, id, payload|
  http_requests.increment(labels: { method: payload[:method], path: payload[:path] })
end
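One thing to watch with the path label: every distinct label value creates a separate time series, so raw paths like /products/123 and /products/124 each get their own counter. A rough sketch of one way to keep that in check is below; normalize_path is a hypothetical helper of mine, and the rule "numeric segment means ID" is an assumption that may not fit your routes.

```ruby
# Hypothetical helper: collapse a request path into a low-cardinality label.
def normalize_path(path)
  path
    .split("?").first.to_s             # drop the query string, if any
    .gsub(%r{/\d+(?=/|\z)}, "/:id")    # replace purely numeric segments with :id
end

puts normalize_path("/products/123?page=2")
puts normalize_path("/products/123/reviews/7")
```

You would call it where the label is built, for example path: normalize_path(payload[:path]).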

3. Handle Exceptions with Notifications

In your ApplicationController, use ActiveSupport Notifications to capture exceptions:

# app/controllers/application_controller.rb
class ApplicationController < ActionController::Base
  # Emit a notification for unhandled exceptions
  rescue_from StandardError do |exception|
    # Notify about the exception
    ActiveSupport::Notifications.instrument("exception.action_controller", exception: exception)

    # Optionally log the exception
    Rails.logger.error(exception.message)

    # Render a generic error message
    render plain: 'Internal Server Error', status: :internal_server_error
  end
end

And subscribe to this notification in your initializer:

# Subscribe to the exception notification
ActiveSupport::Notifications.subscribe("exception.action_controller") do |name, start, finish, id, payload|
  http_exceptions.increment
end

4. Expose a Metrics Endpoint

To collect and expose your application’s metrics efficiently, you can use Prometheus middleware in your Rack configuration. This middleware automatically handles metrics collection and exposure, making it easy to get started.

# config.ru
require_relative 'config/environment' # loads the Rails app so Rails.application is defined
require 'rack'
require 'prometheus/middleware/collector'
require 'prometheus/middleware/exporter'

use Rack::Deflater
use Prometheus::Middleware::Collector
use Prometheus::Middleware::Exporter

run Rails.application

With this setup, the middleware will automatically expose your Prometheus metrics at the /metrics endpoint, allowing Prometheus to scrape the data without the need for additional controller actions.
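If you are curious what Prometheus actually receives when it scrapes /metrics, the response is plain text in the Prometheus exposition format. The sketch below renders a single counter by hand just to show the shape; render_counter is a made-up helper for illustration (the gem does this for you), and it skips escaping of label values.

```ruby
# Renders one counter in the Prometheus text exposition format.
# `samples` maps a label hash to the counter's current value.
def render_counter(name, docstring, samples)
  lines = ["# HELP #{name} #{docstring}", "# TYPE #{name} counter"]
  samples.each do |labels, value|
    label_str = labels.map { |k, v| %(#{k}="#{v}") }.join(",")
    lines << "#{name}{#{label_str}} #{value.to_f}"
  end
  lines.join("\n")
end

puts render_counter(
  "http_requests_total",
  "A counter of HTTP requests made.",
  { { method: "GET", path: "/products" } => 3 }
)
```

Each combination of label values becomes its own line, which is a handy way to sanity-check your labels with a quick curl against the endpoint.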

Benefits of Using Standardized Metrics

- Automatic Collection: The middleware collects standardized metrics such as request counts, response times, and error rates without any manual coding required. This minimizes the chances of errors in metric implementation and ensures consistency across different applications.

- Simplicity: By leveraging standardized metrics, you can avoid the overhead of managing custom metrics unless absolutely necessary, allowing you to focus on your application’s core functionality.

When to Use Custom Metrics

While standardized metrics cover many common use cases, there may be instances where custom metrics are essential to capture specific aspects of your application’s performance or business logic.

- Tailored Insights: Custom metrics allow you to track unique user interactions or application performance indicators that standardized metrics might not address.

- Greater Flexibility: Implementing custom metrics provides you the flexibility to adapt your monitoring strategy as your application evolves. However, keep in mind that this approach may require additional maintenance and careful planning to ensure that the metrics remain relevant and accurately reflect your application’s behavior.

In summary, using the middleware to expose a /metrics endpoint provides a quick and efficient way to leverage the power of Prometheus in your Rails application, whether through standardized metrics or tailored custom ones.

5. Start Prometheus

Make sure you have Prometheus installed and running. Use a configuration file (e.g., prometheus.yml) to define your scrape configuration:

scrape_configs:
  - job_name: 'rails_app'
    static_configs:
      - targets: ['localhost:3000'] # Adjust the port if needed

6. Access the Prometheus Web Interface

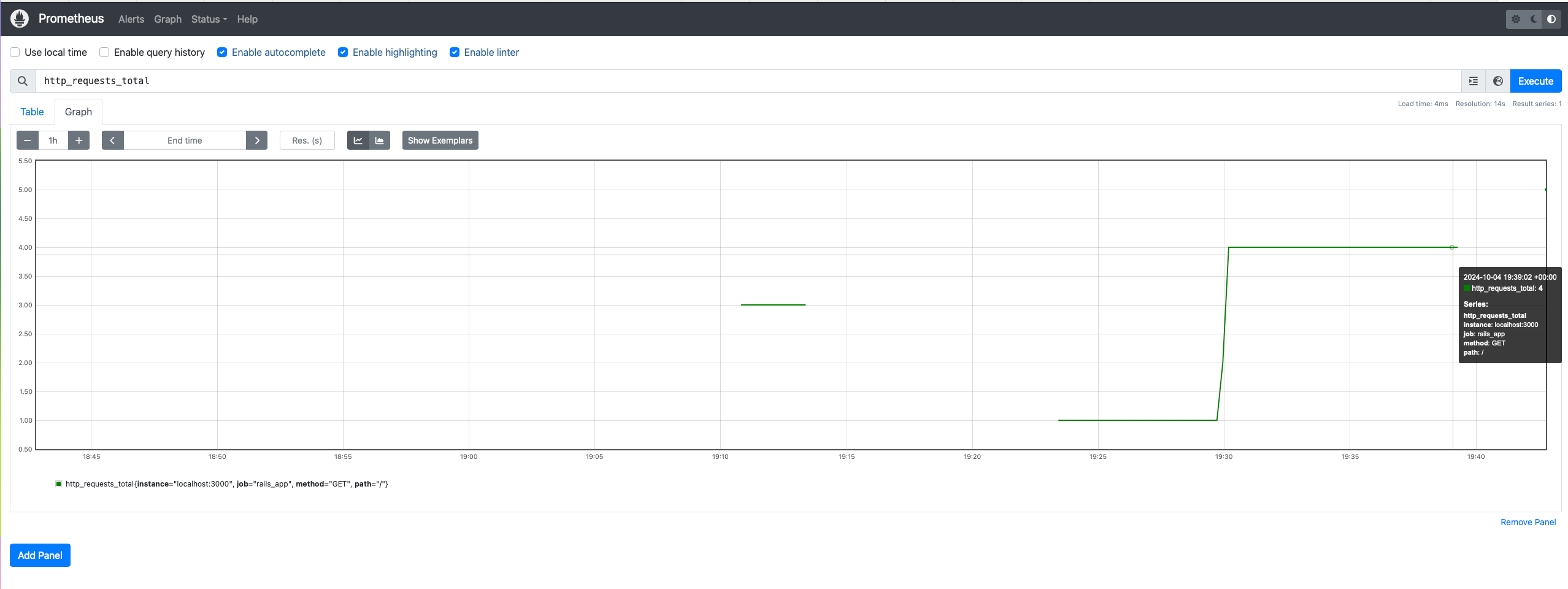

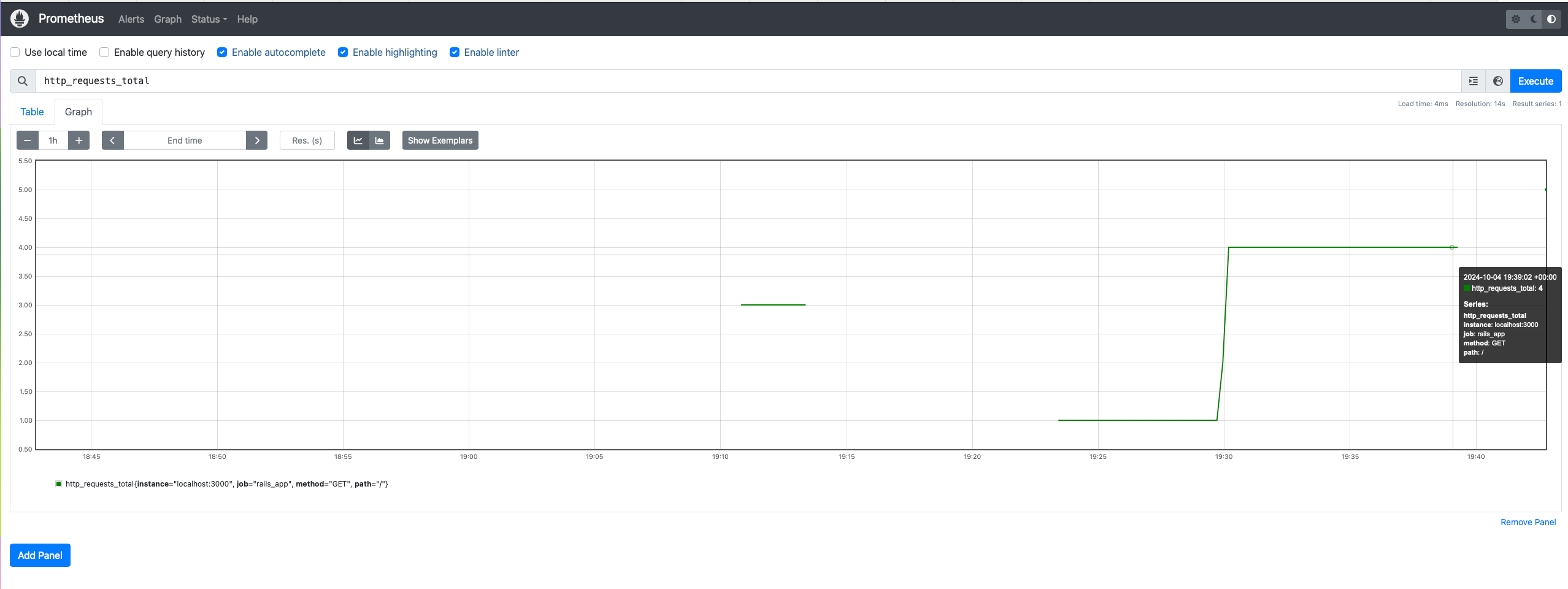

Prometheus provides a built-in web interface to view metrics. You can access it at http://localhost:9090. Use the Graph tab to query metrics using PromQL.

7. Setting Up Grafana (Optional)

While Prometheus has a web interface, many users prefer to visualize metrics using Grafana. You can find installation instructions on the Grafana website.

Start Grafana on a different port if Rails is already running on 3000 (e.g., set http_port = 3001 under [server] in grafana.ini or defaults.ini):

grafana server # log in with the default username and password, admin/admin

Add Prometheus as a data source in Grafana by navigating to Configuration > Data Sources and selecting Prometheus.

8. Alerts with Prometheus

To set up alerts, define alerting rules in a rules file and reference that file from prometheus.yml under rule_files. For instance, you can create an alert for exceptions:

groups:
  - name: alerting_rules
    rules:
      - alert: HighHttpExceptions
        # A counter only ever goes up, so compare its growth over the window
        expr: increase(http_exceptions_total[5m]) > 10
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "High number of HTTP exceptions"
          description: "More than 10 exceptions in the last 5 minutes."
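A note on counters in alert expressions: a counter is cumulative, so its raw value keeps growing for as long as the process runs. "Exceptions in the last 5 minutes" corresponds to how much the counter grew over that window. The sketch below shows the difference with made-up samples; simple_increase is a deliberately simplified stand-in for PromQL's increase(), which also extrapolates and handles counter resets.

```ruby
# Each sample is [unix_timestamp_seconds, counter_value]; the data is invented.
SAMPLES = [[0, 40], [60, 41], [120, 41], [180, 43], [240, 44], [300, 44]].freeze

# Growth of the counter between the first and last sample inside the window.
# (Real Prometheus also extrapolates and handles counter resets; this does not.)
def simple_increase(samples, now:, window:)
  in_window = samples.select { |ts, _| ts >= now - window && ts <= now }
  return 0 if in_window.size < 2

  in_window.last[1] - in_window.first[1]
end

raw_value = SAMPLES.last[1]
growth    = simple_increase(SAMPLES, now: 300, window: 300)

puts "raw value: #{raw_value}, increase over 5m: #{growth}"
```

Here the raw counter is well above 10 even though only a handful of exceptions happened in the window, so a threshold on the raw value would keep firing long after the problem went away.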

Viewing Metrics Without Grafana

You can view Prometheus metrics directly without Grafana. Use the Prometheus web interface to run queries and visualize metrics as graphs. Simply navigate to http://localhost:9090 and explore the metrics.

03 Oct 2024

If you’ve interacted with an AI language model, you’ve probably noticed that certain words or phrases tend to come up again and again. One word that often appears is “delve” — as in “let’s delve into this topic.” But why does AI “like” to use certain words so much? The answer lies in how these models generate text and, more specifically, in the processes known as greedy decoding and stochastic sampling.

The Basics of AI Text Generation

AI language models, like GPT, generate text by predicting the next word in a sequence based on previous words. The goal is to model the probability of the next word given the context. Formally, this looks something like this:

P(x_t | x_1, x_2, ..., x_{t-1})

In plain terms, the model predicts the probability of the next word (x_t) based on all the words that came before it (x_1, x_2, ..., x_{t-1}). To actually generate text, we need a strategy for choosing the next word. This is where greedy decoding and stochastic sampling come into play.

Greedy Decoding

In greedy decoding, the model always picks the word with the highest probability at each step. This results in a very focused and deterministic sequence of text. While this can be useful for ensuring coherence, it tends to make the output repetitive or overly cautious — which explains why certain phrases like “delve” might get overused.

Here’s an Elixir example of greedy decoding:

defmodule GreedyDecoder do
  # `model` takes the context tokens and returns a list of
  # {word, probability} tuples for the next word.
  def predict_next_word(tokens, model) do
    model.(tokens)
    |> Enum.max_by(fn {_word, probability} -> probability end)
    |> elem(0)
  end
end

# Example usage
vocab = ["explore", "delve", "analyze", "investigate"]
model = fn _tokens -> Enum.zip(vocab, [0.6, 0.3, 0.05, 0.05]) end
tokens = ["The", "researcher", "decided", "to"]

GreedyDecoder.predict_next_word(tokens, model)
# Output: "explore"

In this case, the word with the highest probability (“explore”) is always selected. If “delve” has a consistently high probability in similar contexts, you’ll see it again and again.

Stochastic Sampling

Stochastic sampling, on the other hand, introduces some randomness. Instead of always picking the word with the highest probability, the model samples from the probability distribution — meaning that words with lower probabilities still have a chance to be chosen. This method encourages more variety and creativity in the generated text.

Here’s how you could implement stochastic sampling in Elixir:

defmodule StochasticDecoder do
  # `model` takes the context tokens and returns a list of
  # {word, probability} tuples for the next word.
  def predict_next_word(tokens, model) do
    model.(tokens)
    # Repeat each word in proportion to its probability, then pick one at random
    |> Enum.flat_map(fn {word, prob} -> List.duplicate(word, round(prob * 100)) end)
    |> Enum.random()
  end
end

# Example usage
vocab = ["explore", "delve", "analyze", "investigate"]
model = fn _tokens -> Enum.zip(vocab, [0.6, 0.3, 0.05, 0.05]) end
tokens = ["The", "researcher", "decided", "to"]

StochasticDecoder.predict_next_word(tokens, model)
# Possible outputs: "delve", "explore", "investigate", or "analyze" (depending on the random sampling)

By using stochastic sampling, there’s a chance the AI will pick words other than “explore”, even though it has the highest probability. This can lead to more diverse and creative outputs.
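Duplicating words in a list works for a demo, but a more common way to sample is to walk the cumulative distribution. Here is a small Ruby sketch of that idea (Ruby only because it is what most of the examples on this blog use); sample_word is a name I made up, and the fixed seed is there just to make runs repeatable.

```ruby
# Draws one word from { word => probability } using the cumulative distribution.
def sample_word(distribution, rng: Random.new)
  threshold = rng.rand * distribution.values.sum
  cumulative = 0.0
  distribution.each do |word, prob|
    cumulative += prob
    return word if threshold < cumulative
  end
  distribution.keys.last # guard against floating-point rounding
end

distribution = { "explore" => 0.6, "delve" => 0.3, "analyze" => 0.05, "investigate" => 0.05 }
rng = Random.new(42) # fixed seed so runs are repeatable
counts = Hash.new(0)
10_000.times { counts[sample_word(distribution, rng: rng)] += 1 }
puts counts.inspect
```

Over many draws, "explore" should come up roughly 60% of the time and "delve" roughly 30%, with the two rare words appearing occasionally.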

How to Adjust the “Temperature” for More Creative Output

If you want the AI to generate more creative and varied responses, one of the most important parameters to adjust is the temperature.

- Lower temperatures (closer to 0) make the model more deterministic, meaning it will stick to the most probable word choices.

- Higher temperatures (around 1 and above) introduce more randomness, encouraging the model to pick less common words and be more inventive. At exactly 1, the model samples from its unmodified probability distribution.

How to Change the Temperature

In practice, how you change the temperature depends on the tool or platform you’re using; many APIs and tools expose it as a temperature setting.

Here’s an example showing how to adjust the temperature in Elixir:

defmodule TemperatureDecoder do
  # `model` takes the context tokens and returns a list of
  # {word, probability} tuples for the next word.
  def predict_next_word(tokens, model, temperature \\ 1.0) do
    scaled =
      model.(tokens)
      |> Enum.map(fn {word, prob} -> {word, :math.pow(prob, 1.0 / temperature)} end)

    # Renormalize so the scaled weights sum to 1 again
    total = scaled |> Enum.map(fn {_word, weight} -> weight end) |> Enum.sum()

    scaled
    |> Enum.flat_map(fn {word, weight} -> List.duplicate(word, round(weight / total * 100)) end)
    |> Enum.random()
  end
end

# Example usage with temperature
vocab = ["explore", "delve", "analyze", "investigate"]
model = fn _tokens -> Enum.zip(vocab, [0.6, 0.3, 0.05, 0.05]) end
tokens = ["The", "researcher", "decided", "to"]

TemperatureDecoder.predict_next_word(tokens, model, 0.7)
# Output will vary based on the adjusted temperature

Lowering the temperature parameter leads to more predictable outcomes, while raising it adds creativity and variety to the AI’s word choices.
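To see the effect on the numbers themselves rather than on a single random draw, here is a short Ruby sketch that rescales the example distribution at a few temperatures. It uses the same "raise to the power 1/T" step as the decoder above and then renormalizes, which gives the same result as dividing the logits by T before the softmax. apply_temperature is a name I picked for this sketch.

```ruby
# Rescales a { word => probability } distribution by temperature:
# raise each probability to 1/T, then renormalize so the values sum to 1.
def apply_temperature(distribution, temperature)
  raise ArgumentError, "temperature must be positive" unless temperature.positive?

  scaled = distribution.transform_values { |p| p**(1.0 / temperature) }
  total = scaled.values.sum
  scaled.transform_values { |p| p / total }
end

distribution = { "explore" => 0.6, "delve" => 0.3, "analyze" => 0.05, "investigate" => 0.05 }

[0.5, 1.0, 2.0].each do |t|
  rounded = apply_temperature(distribution, t).transform_values { |p| p.round(3) }
  puts "T=#{t}: #{rounded}"
end
```

At T=0.5 the top word takes an even larger share than its original 0.6, at T=1.0 the distribution is unchanged, and at T=2.0 the probabilities move closer together so the rare words get picked more often.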

Conclusion

AI language models generate text by predicting the next word based on prior context. Techniques like greedy decoding result in more deterministic, focused text, while stochastic sampling can add variety and creativity. If you’re looking to guide the AI towards more varied, creative outputs, adjusting the temperature is key. Lower temperatures make responses more predictable, while higher temperatures foster creativity by allowing more diverse word choices.

So, why does AI like to “delve” so much? It’s not that the model has a preference, but rather that certain words come up more often in certain contexts due to how probabilities are calculated. By understanding and tweaking decoding strategies, you can have more control over the AI’s output — and maybe even get it to “explore” a little more often than “delve.”